SIGLuxen#

Scene Integrated Generation for Neural Radiance Fields

Generatively edits Luxen scenes in a controlled and fast manner.

SIGLuxen allows for generative 3D scene editing. We present a novel approach to combine Luxens as scene representation with the image diffusion model StableDiffusion to allow fast and controlled 3D generation.

Installation#

Install luxenstudio dependencies. Then run:

pip install git+https://github.com/cgtuebingen/SIGLuxen

SIGLuxen requires to use Stable Diffusion Web UI. For detailed installation information follow our installation guide.

Running SIGLuxen#

Details for running SIGLuxen can be found here. Once installed, run:

ns-train sigluxen --help

Two variants of SIGLuxen are provided:

Method |

Description |

Time |

Quality |

|---|---|---|---|

|

Full model, used in paper |

40min |

Best |

|

Faster model with Luxenacto |

20min |

Good |

For more information on hardware requirements and training times, please refer to the training section.

Interface#

SIGLuxen fully integrates into the Luxenstudio viser interface. It allows for easy editing of Luxen scenes with a few simple clicks. The user can select the editing method, the region to edit, and the object to insert. The reference sheet can be previewed and the Luxen is fine-tuned on the edited images. If you are interested in how we fully edit the Luxenstudio interface please find our code here.

Method#

Overview#

SIGLuxen is a novel approach for fast and controllable Luxen scene editing and scene-integrated object generation. We introduce a new generative update strategy that ensures 3D consistency across the edited images, without requiring iterative optimization. We find that depth-conditioned diffusion models inherently possess the capability to generate 3D consistent views by requesting a grid of images instead of single views. Based on these insights, we introduce a multi-view reference sheet of modified images. Our method updates an image collection consistently based on the reference sheet and refines the original Luxen with the newly generated image set in one go. By exploiting the depth conditioning mechanism of the image diffusion model, we gain fine control over the spatial location of the edit and enforce shape guidance by a selected region or an external mesh.

For an in-depth visual explanation and our results please watch our videos or read our paper.

Pipeline#

We leverage the strengths of ControlNet, a depth condition image diffusion model, to edit an existing Luxen scene. We do so with a few simple steps in a single forward pass:

We start with an original Luxen scene and select an editing method / region

For object generation, we place a mesh object into the scene

And control the precise location and shape of the edit

We position reference cameras in the scene

Render the corresponding color, depth, and mask images, and arrange them into image grids

These grids are used to generate the reference sheet with conditioned image diffusion

Generate new edited images consistent with the reference sheet by leveraging an inpainting mask.

Repeat step (6) for all cameras

Finally, the Luxen is fine-tuned on the edited images

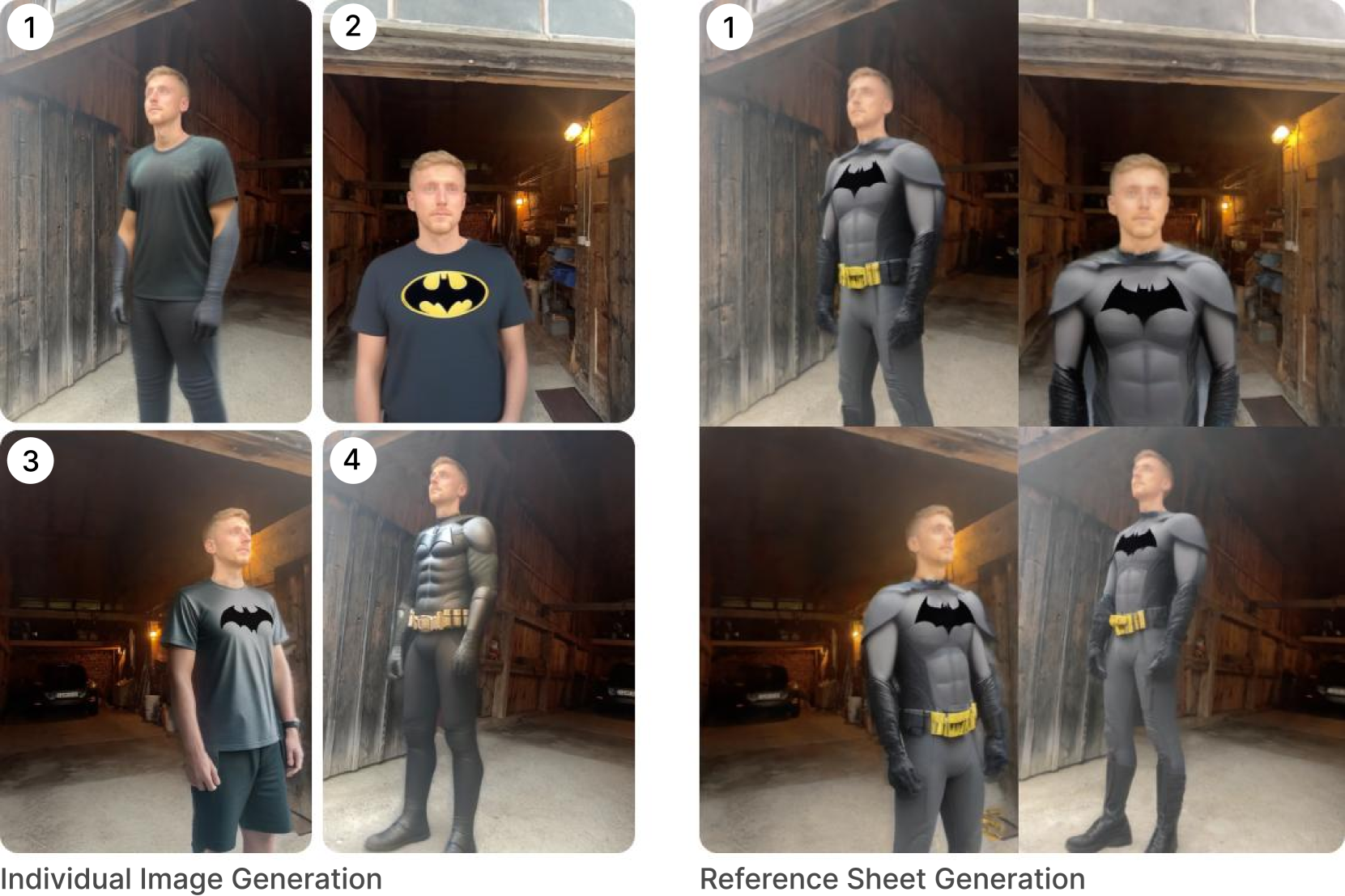

Reference Sheet Generation#

SIGLuxen uses a novel technique called reference sheet generation. We observe that the image diffusion model ControlNet can already generate multiview consistent images of a scene without the need for iterative refinement like Instruct-Luxen2Luxen. While generating individual views sequentially introduces too much variation to integrate them into a consistent 3D model, arranging them in a grid of images that are processed by ControlNet in one pass significantly improves the multi-view consistency. Based on the depth maps rendered from the original Luxen scene we employ a depth-conditioned inpainting variant of ControlNet to generate such a reference sheet of the edited scene. A mask specifies the scene region where the generation should occur. This step gives a lot of control to the user. Different appearances can be produced by generating reference sheets with different seeds or prompts. The one sheet finally selected will directly determine the look of the final 3D scene.

If you want to learn more about the method, please read our paper or read the breakdown of the method in the Radiance Fields article.